
| import os

import numpy as np

import google_auth_oauthlib.flow

import googleapiclient.discovery

import googleapiclient.errors

from googleapiclient.errors import HttpError

import pandas as pd

import json

import socket

import socks

import requests

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36'}

socks.set_default_proxy(socks.SOCKS5, "127.0.0.1", 10080)

socket.socket = socks.socksocket

scopes = ["https://www.googleapis.com/auth/youtube.force-ssl"]

def main():

os.environ["OAUTHLIB_INSECURE_TRANSPORT"] = "1"

api_service_name = "youtube"

api_version = "v3"

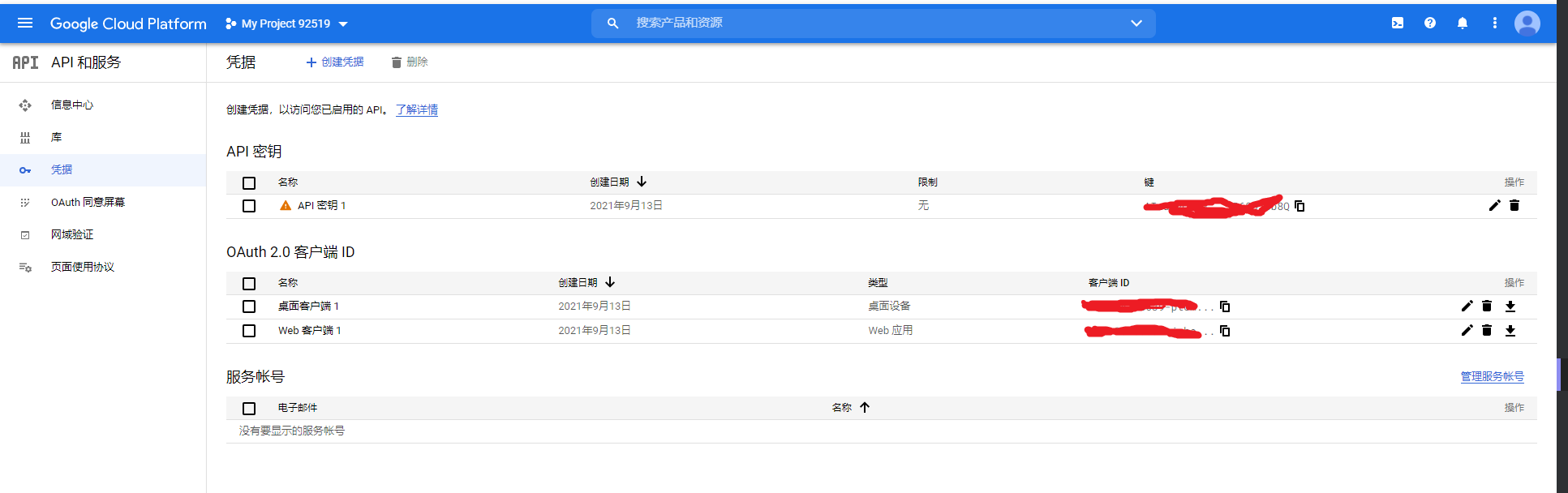

client_secrets_file = "client_secret_303198188639-ptcmpb7m0urubvl0mvoip8tp05tp8lv6.apps.googleusercontent.com.json"

flow = google_auth_oauthlib.flow.InstalledAppFlow.from_client_secrets_file(

client_secrets_file, scopes)

credentials = flow.run_console()

youtube = googleapiclient.discovery.build(

api_service_name, api_version, credentials=credentials)

videoId = '5YGc4zOqozo'

request = youtube.commentThreads().list(

part="snippet,replies",

videoId=videoId,

maxResults = 100

)

response = request.execute()

totalResults = 0

totalResults = int(response['pageInfo']['totalResults'])

count = 0

nextPageToken = ''

comments = []

first = True

further = True

while further:

halt = False

if first == False:

print('..')

try:

response = youtube.commentThreads().list(

part="snippet,replies",

videoId=videoId,

maxResults = 100,

textFormat='plainText',

pageToken=nextPageToken

).execute()

totalResults = int(response['pageInfo']['totalResults'])

except HttpError as e:

print("An HTTP error %d occurred:\n%s" % (e.resp.status, e.content))

halt = True

if halt == False:

count += totalResults

for item in response["items"]:

comment = item["snippet"]["topLevelComment"]

author = comment["snippet"]["authorDisplayName"]

text = comment["snippet"]["textDisplay"]

likeCount = comment["snippet"]['likeCount']

publishtime = comment['snippet']['publishedAt']

comments.append([author, publishtime, likeCount, text])

if totalResults < 100:

further = False

first = False

else:

further = True

first = False

try:

nextPageToken = response["nextPageToken"]

except KeyError as e:

print("An KeyError error occurred: %s" % (e))

further = False

print('get data count: ', str(count))

data = np.array(comments)

df = pd.DataFrame(data, columns=['author', 'publishtime', 'likeCount', 'comment'])

df.to_csv('google_comments.csv', index=0, encoding='utf-8')

result = []

for name, time, vote, comment in comments:

temp = {}

temp['author'] = name

temp['publishtime'] = time

temp['likeCount'] = vote

temp['comment'] = comment

result.append(temp)

print('result: ', len(result))

json_str = json.dumps(result, indent=4)

with open('google_comments.json', 'w', encoding='utf-8') as f:

f.write(json_str)

f.close()

if __name__ == "__main__":

main()

|
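The paging logic in `main()` can be exercised without network access if it is separated from the API client. Below is a minimal sketch under stated assumptions. `iter_pages`, `rows_from_page`, `make_fetch_page`, `_thread` and the canned `FAKE_PAGES` data are hypothetical names introduced for illustration. The only thing assumed about the API is that each decoded `commentThreads.list` response is a dict with an `items` list and, on every page except the last, a `nextPageToken` string.

```python
from typing import Callable, Iterator, List, Optional

Page = dict  # one decoded commentThreads.list response (assumed shape)


def iter_pages(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[Page]:
    """Yield pages until a response arrives without a nextPageToken."""
    token: Optional[str] = None  # None means "first page"
    while True:
        page = fetch_page(token)
        yield page
        token = page.get("nextPageToken")
        if not token:
            return


def rows_from_page(page: Page) -> List[list]:
    """Flatten top-level comments into [author, publishtime, likeCount, comment]."""
    rows = []
    for item in page.get("items", []):
        snippet = item["snippet"]["topLevelComment"]["snippet"]
        rows.append([snippet["authorDisplayName"], snippet["publishedAt"],
                     snippet["likeCount"], snippet["textDisplay"]])
    return rows


def make_fetch_page(youtube, video_id: str) -> Callable[[Optional[str]], Page]:
    """Hypothetical adapter binding the real client built in main()."""
    def fetch_page(token: Optional[str]) -> Page:
        params = dict(part="snippet,replies", videoId=video_id,
                      maxResults=100, textFormat="plainText")
        if token:
            params["pageToken"] = token
        return youtube.commentThreads().list(**params).execute()
    return fetch_page


def _thread(author: str, published: str, likes: int, text: str) -> dict:
    """Build a fake commentThread item holding only the fields read above."""
    return {"snippet": {"topLevelComment": {"snippet": {
        "authorDisplayName": author, "publishedAt": published,
        "likeCount": likes, "textDisplay": text}}}}


# Offline stand-in for the API: two canned pages keyed by page token.
FAKE_PAGES = {
    None: {"items": [_thread("alice", "2020-01-01T00:00:00Z", 3, "first")],
           "nextPageToken": "page-2"},
    "page-2": {"items": [_thread("bob", "2020-01-02T00:00:00Z", 0, "second")]},
}

rows = [row for page in iter_pages(FAKE_PAGES.__getitem__)
        for row in rows_from_page(page)]
print(rows)
```

In the script, `iter_pages(make_fetch_page(youtube, videoId))` could take the place of the hand-written `while` loop. The discovery-based client also generates `list_next(previous_request, previous_response)` helpers for paginated methods, which is another way to follow the token.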
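The two output steps at the end of `main()` can also be sketched with only the standard library, which makes the column handling explicit. In this sketch the `csv` and `json` modules stand in for the script's pandas/NumPy calls, and `save_comments` and `SAMPLE` are hypothetical names. Rows are written as-is, so `likeCount` stays an integer in the JSON file. By contrast, `np.array` coerces a list that mixes strings and integers to a single string dtype.

```python
import csv
import json
import os
import tempfile
from typing import List, Sequence, Tuple

COLUMNS = ["author", "publishtime", "likeCount", "comment"]


def save_comments(rows: Sequence[Sequence], out_dir: str) -> Tuple[str, str]:
    """Write rows to google_comments.csv and google_comments.json in out_dir."""
    csv_path = os.path.join(out_dir, "google_comments.csv")
    json_path = os.path.join(out_dir, "google_comments.json")

    # newline="" lets the csv module control line endings itself.
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(COLUMNS)
        writer.writerows(rows)

    # One JSON object per comment, keyed by column name; ensure_ascii=False
    # keeps non-ASCII comment text readable in the file.
    records: List[dict] = [dict(zip(COLUMNS, row)) for row in rows]
    with open(json_path, "w", encoding="utf-8") as f:
        json.dump(records, f, indent=4, ensure_ascii=False)
    return csv_path, json_path


# Demo in a throwaway directory; the comma in the text exercises CSV quoting.
SAMPLE = [["alice", "2020-01-01T00:00:00Z", 3, "héllo, world"]]
with tempfile.TemporaryDirectory() as tmp:
    csv_path, json_path = save_comments(SAMPLE, tmp)
    with open(json_path, encoding="utf-8") as f:
        saved_json = json.load(f)
    with open(csv_path, newline="", encoding="utf-8") as f:
        saved_csv = list(csv.reader(f))
print(saved_json)
```

CSV has no types, so `likeCount` reads back as the string `"3"`. The JSON records keep it as a number.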